This is an ML/deep learning and NLP project that trains recurrent neural networks to generate Trump tweets (text).

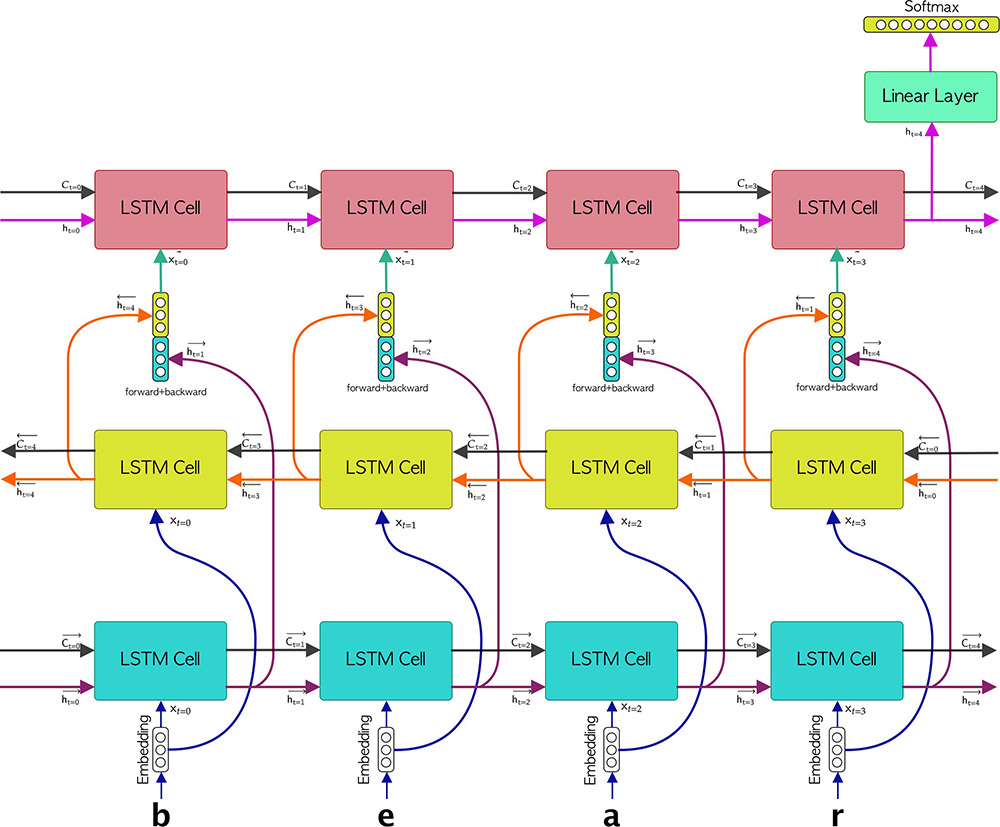

- A recurrent neural network (RNN) with LSTM units for text generation, trained on a dataset of Donald Trump tweets using contextual labels; it can generate realistic tweets from random noise.

- Support for bidirectional RNNs and for techniques such as attention weighting and skip-embedding.

- A cuDNN implementation for training the RNNs on an NVIDIA GPU.

- This project has been specifically optimised for the Trump tweet dataset and for generating Trump tweets, but it can be used with any text dataset.
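To illustrate what attention weighting and skip-embedding typically mean in a text-generation RNN, here is a minimal plain-Python sketch. It assumes that skip-embedding means concatenating each timestep's word embedding with the outputs of the RNN layers, and that the attention layer collapses the per-timestep features into one vector via a softmax-weighted average. This is not this project's implementation: the function names and the scoring vector `w` (which a real model would learn during training) are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]

def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: shift by the max, exponentiate, normalise."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def skip_concat(embeddings: List[Vector], *layer_outputs: List[Vector]) -> List[Vector]:
    """Skip connection: at each timestep, join the embedding with every RNN layer's output."""
    return [
        [x for seq in (embeddings, *layer_outputs) for x in seq[t]]
        for t in range(len(embeddings))
    ]

def attention_weighted_average(states: List[Vector], w: Vector) -> Tuple[Vector, Vector]:
    """Score each timestep with w, softmax the scores, and average the states by those weights."""
    scores = [sum(s_i * w_i for s_i, w_i in zip(state, w)) for state in states]
    alphas = softmax(scores)  # one weight per timestep, summing to 1
    dim = len(states[0])
    context = [sum(a * state[j] for a, state in zip(alphas, states)) for j in range(dim)]
    return context, alphas

# Toy inputs: 3 timesteps, 2-d embeddings, and one 1-unit "RNN layer".
emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
rnn_out = [[0.5], [0.2], [0.9]]
features = skip_concat(emb, rnn_out)   # each row now has 2 + 1 = 3 values
w = [0.1, 0.2, 0.3]                    # hypothetical learned scoring vector
context, alphas = attention_weighted_average(features, w)
```

Timesteps whose features score higher under `w` receive larger weights, so the averaged `context` vector leans toward the most relevant words in the input sequence.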


Clone this repository

Download the dataset (from Resources)

Download the dependencies, and CUDA

Train from train.py# Simple Model Training (train.py)

from model.model import TextGenModel

model_config = {

'name': 'trump_tweet_model',

'meta_token': "<s>",

'word_level': True,

'rnn_layers': 2,

'rnn_size': 512,

'rnn_bidirectional': False,

'max_length': 40,

'max_words': 20000,

'dim_embeddings': 100,

'word_level': True,

'single_text': False

}

trump_tweet_model = TextGenModel(model_config=model_config)

trump_tweet_model.train('trump_tweet_dataset.txt', header=False,

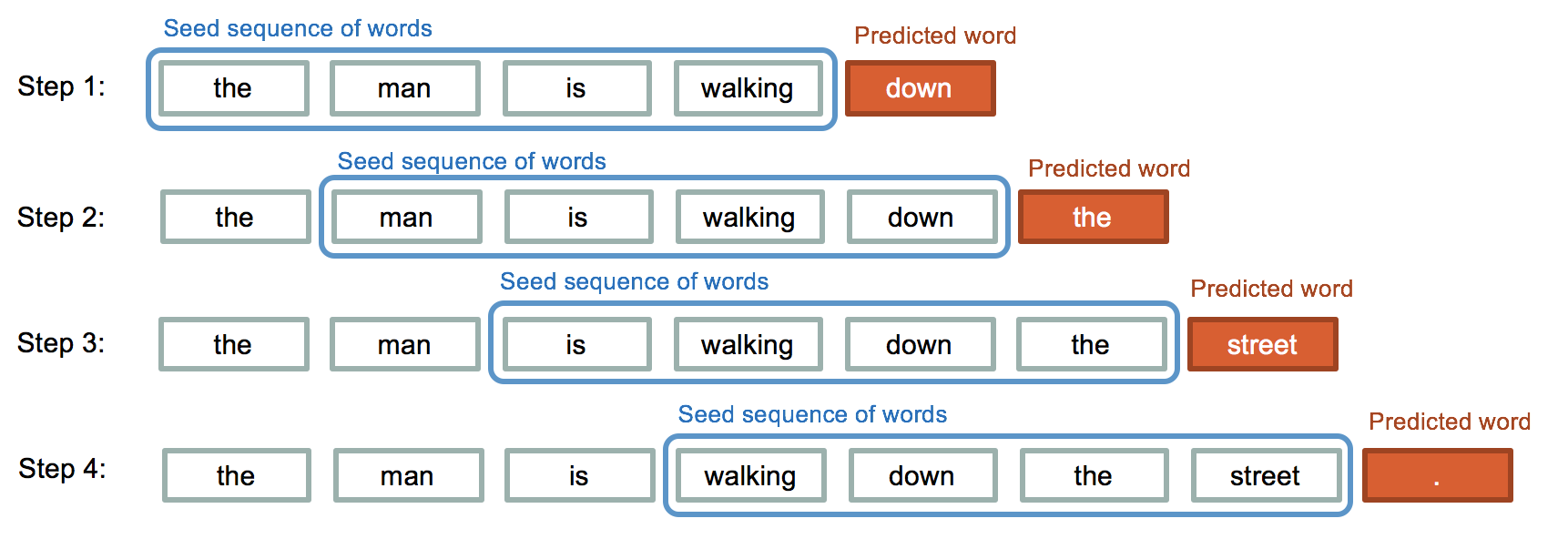

num_epochs=4, new_model=True)- The recurrent neural network takes sequence of words as input and outputs a matrix of probability for each word from dictionary to be the next of given sequence.

- The model also learns how similar words (or characters) are to one another and uses this when calculating each probability.

- Using these probabilities, it predicts or generates the next word or character of the sequence.
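The prediction step described in these points can be sketched in plain Python. This is a minimal illustration, assuming the network's final layer produces one raw score (logit) per vocabulary word and a softmax turns those scores into next-word probabilities. The `temperature` parameter, the helper names, and `toy_scores` (a stand-in for the trained network that ignores its input) are hypothetical and not part of this project's API.

```python
import math
import random
from typing import Callable, Dict, List

def next_word_distribution(logits: Dict[str, float], temperature: float = 1.0) -> Dict[str, float]:
    """Softmax over the vocabulary: one probability per candidate next word."""
    scaled = {word: score / temperature for word, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - top) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

def greedy_next_word(probs: Dict[str, float]) -> str:
    """Predict: pick the single most probable next word."""
    return max(probs, key=probs.get)

def sample_next_word(probs: Dict[str, float], rng: random.Random) -> str:
    """Generate: draw a next word at random, in proportion to its probability."""
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

def generate(score_fn: Callable[[List[str]], Dict[str, float]], prefix: List[str],
             n_words: int, temperature: float, rng: random.Random) -> List[str]:
    """Repeatedly score the sequence so far, sample one word, and append it."""
    tokens = list(prefix)
    for _ in range(n_words):
        probs = next_word_distribution(score_fn(tokens), temperature)
        tokens.append(sample_next_word(probs, rng))
    return tokens

# Hypothetical stand-in for the trained network: fixed scores regardless of context.
def toy_scores(tokens: List[str]) -> Dict[str, float]:
    return {"great": 2.0, "again": 1.0, "america": 0.5, "today": 0.1}

probs = next_word_distribution(toy_scores([]))
print(greedy_next_word(probs))  # great
print(generate(toy_scores, ["make"], n_words=3, temperature=1.0, rng=random.Random(0)))
```

Dividing the scores by a lower temperature concentrates probability on the top-scoring words (approaching greedy prediction), while a higher temperature flattens the distribution and yields more varied text.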