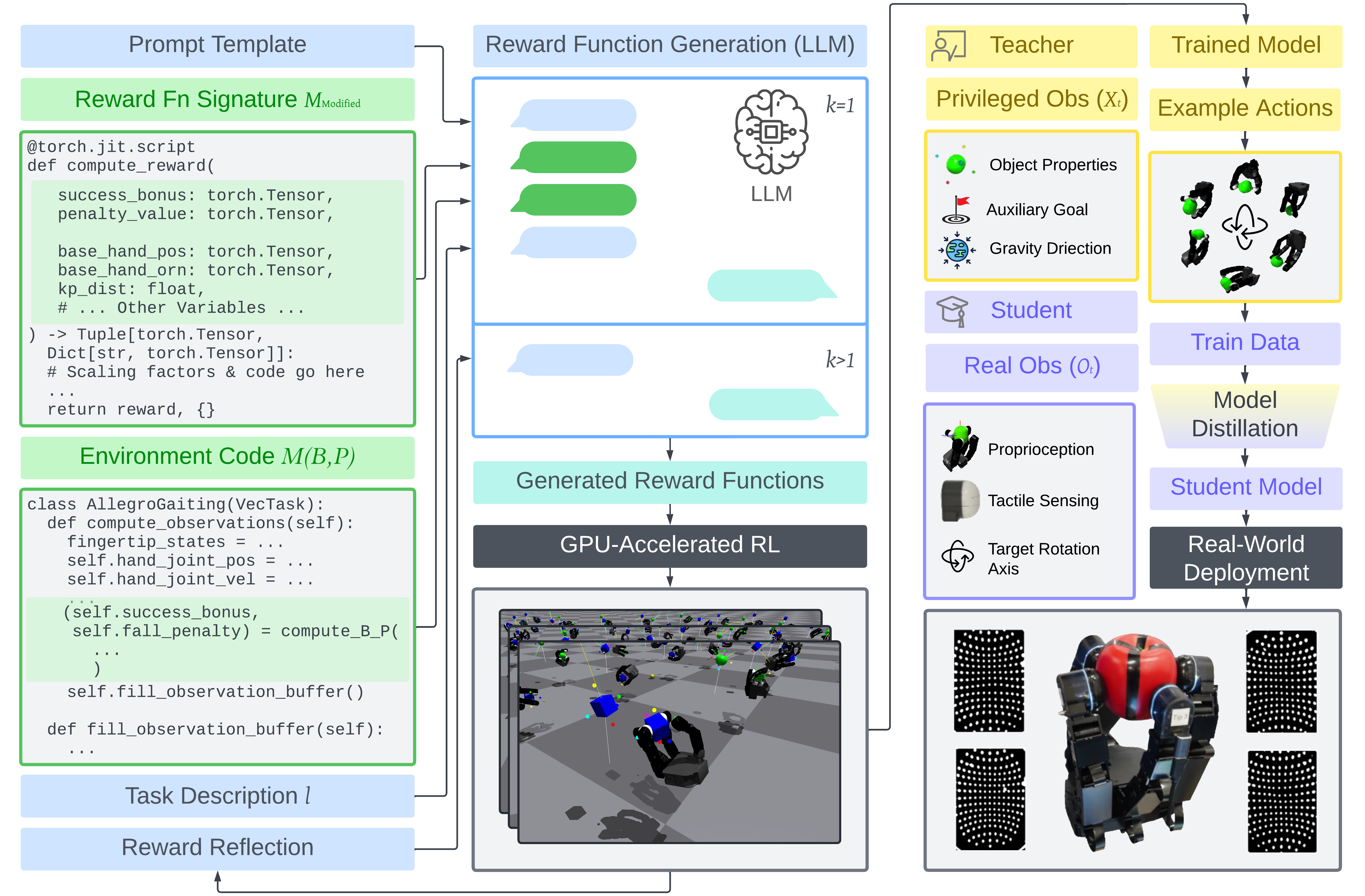

Large language models (LLMs) are beginning to automate reward design for dexterous manipulation. However, no prior work has considered tactile sensing, which is known to be critical for human-like dexterity. We present Text2Touch, bringing LLM-crafted rewards to the challenging task of multi-axis in-hand object rotation with real-world vision-based tactile sensing in palm-up and palm-down configurations. Our prompt engineering strategy scales to over 70 environment variables, and sim-to-real distillation enables successful policy transfer to a tactile-enabled, fully actuated four-fingered dexterous robot hand. Text2Touch significantly outperforms a carefully tuned human-engineered baseline, demonstrating superior rotation speed and stability while relying on reward functions that are an order of magnitude shorter and simpler. These results illustrate how LLM-designed rewards can significantly reduce the time from concept to deployable dexterous tactile skills, supporting more rapid and scalable multimodal robot learning.

Text2Touch requires Python 3.8. We have tested on Ubuntu 22.04.

- Create a new conda environment with:

conda create -n text2touch python=3.8

conda activate text2touch

- Install IsaacGym (tested with Preview Release 4/4). Follow the instructions to download the package.

tar -xvf IsaacGym_Preview_4_Package.tar.gz

cd isaacgym/python

pip install -e .

python examples/joint_monkey.py  # test installation

- Install Text2Touch

git clone PUT FINAL URL HERE

cd Text2Touch; pip install -e .

cd isaacgymenvs; pip install -e .

cd ../rl_games; pip install -e .

cd ../allegro_gym; pip install -e .

- Text2Touch currently supports API keys for openai, anthropic, groq, deepseek, mistral, meta, deepinfra, deepseek-api. You can place your key into `text2touch/cfg/config.yaml`.

- Update packages

pip install numpy==1.22.0

pip install ollama

sudo apt install gpustat
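After the installation steps above, a quick sanity check can confirm that the active interpreter is Python 3.8 and that the pinned `numpy` version is in place. The following is a minimal sketch, not part of the Text2Touch codebase; the helper names `python_ok` and `pin_mismatches` are hypothetical.

```python
import sys
from importlib import metadata

REQUIRED_PYTHON = (3, 8)        # Python version stated in the requirements above
PINNED = {"numpy": "1.22.0"}    # pin taken from the "Update packages" step


def python_ok(version_info=sys.version_info):
    """Return True when the interpreter is Python 3.8.x (hypothetical helper)."""
    return tuple(version_info[:2]) == REQUIRED_PYTHON


def pin_mismatches(pins=PINNED):
    """Return {package: installed version or None} for every pin that is not met."""
    problems = {}
    for name, wanted in pins.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            found = None  # package is not installed at all
        if found != wanted:
            problems[name] = found
    return problems
```

Calling `python_ok()` and `pin_mismatches()` inside the activated `text2touch` environment should return `True` and an empty dictionary if the steps above succeeded.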

Navigate to the text2touch directory and run:

python text2touch.py env={environment} iteration={num_iterations} sample={num_samples}

- `{environment}` is the task to perform. Options are listed in `text2touch/cfg/env`.

- `{num_samples}` is the number of reward samples to generate per iteration. Default value is `8`.

- `{num_iterations}` is the number of reward generation iterations to run. Default value is `5`.
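When launching several runs from a script, the command can be assembled programmatically. The sketch below only mirrors the `env=`, `iteration=` and `sample=` arguments and the defaults documented above; `build_command` is a hypothetical helper, and `my_task` is a placeholder rather than a real environment name.

```python
import shlex


def build_command(env, iterations=5, samples=8):
    """Assemble the text2touch.py invocation using the documented defaults."""
    return [
        "python",
        "text2touch.py",
        f"env={env}",
        f"iteration={iterations}",
        f"sample={samples}",
    ]


# "my_task" is a placeholder; use a name listed in text2touch/cfg/env.
cmd = build_command("my_task")
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from inside the text2touch directory.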

Each run creates a timestamped folder in text2touch/outputs that stores the Text2Touch log as well as all intermediate reward functions and associated policies.
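To pick up the results of the most recent run, one option is to select the newest folder by modification time. This is a minimal sketch that assumes each run is a direct subdirectory of the outputs folder; it does not rely on any particular timestamp naming scheme, and `latest_run` is a hypothetical helper.

```python
from pathlib import Path
from typing import Optional


def latest_run(outputs_dir: str = "text2touch/outputs") -> Optional[Path]:
    """Return the most recently modified run folder, or None if there is none."""
    root = Path(outputs_dir)
    if not root.is_dir():
        return None
    # Assumption: every run lives in its own direct subdirectory.
    runs = [p for p in root.iterdir() if p.is_dir()]
    return max(runs, key=lambda p: p.stat().st_mtime, default=None)
```

Run from the repository root, `latest_run()` returns the folder of the latest run, or `None` if no run has been made yet.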

- Copy the contents of your best-performing generated env into `allegro_gym/smg_gym/tasks/gaiting/allegro_gaitinggpt.py`.

- Run `sh allegro_gym/smg_gym/scripts/training_script_gpt_s1.sh` to run full stage 1 training and then `sh allegro_gym/smg_gym/scripts/run_ppo_adapt_eval_s1.sh` for evaluation.

- Run `sh allegro_gym/smg_gym/scripts/run_ppo_adapt_training_s2.sh` to run stage 2 model distillation to real-world observations and then `sh allegro_gym/smg_gym/scripts/run_ppo_adapt_eval_s2.sh` for evaluation.
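The four scripts above can also be chained so that a failure in one stage stops the rest. The following sketch assumes it is run from the repository root and that each script exits with a non-zero status on failure; `stage_commands` and `run_stages` are hypothetical helpers, not part of the codebase.

```python
import subprocess

SCRIPT_DIR = "allegro_gym/smg_gym/scripts"
STAGE_SCRIPTS = [
    "training_script_gpt_s1.sh",     # stage 1 training
    "run_ppo_adapt_eval_s1.sh",      # stage 1 evaluation
    "run_ppo_adapt_training_s2.sh",  # stage 2 distillation
    "run_ppo_adapt_eval_s2.sh",      # stage 2 evaluation
]


def stage_commands():
    """Return the `sh <script>` commands in the order listed above."""
    return [["sh", f"{SCRIPT_DIR}/{name}"] for name in STAGE_SCRIPTS]


def run_stages(runner=subprocess.run):
    """Run each stage in order; check=True raises on the first failing script."""
    for cmd in stage_commands():
        runner(cmd, check=True)
```

Calling `run_stages()` executes the stages back to back; the `runner` argument exists only so the order can be inspected without launching training.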

We thank the following open-source projects:

- Our base reward function generation code is from Eureka

- Our in-hand manipulation environment and sim2real code is from AnyRotate

This codebase is released under the MIT License.

If you find our work useful, please consider citing us!

@inproceedings{field2025texttouch,

title={Text2Touch: Tactile In-Hand Manipulation with {LLM}-Designed Reward Functions},

author={Harrison Field and Max Yang and Yijiong Lin and Efi Psomopoulou and David A.W. Barton and Nathan F. Lepora},

booktitle={9th Annual Conference on Robot Learning},

year={2025},

url={https://openreview.net/forum?id=U9zcbQVDGa}

}